Let us look at how AI and algorithms reflect human biases and stereotypes in today's tech environment.


Artificial Intelligence and algorithms direct our lives today, from paying bills via apps and booking movie tickets online to measuring heart rates and something as simple as ordering groceries. AI can now imitate a growing number of human processes. The very role of AI is to augment human tasks and potential, so when the AI and algorithms we use every day begin to reflect human biases and stereotypes, we are in trouble.

People are error-prone and biased, but that does not mean algorithms are inherently better. The technology now makes important decisions about your life: it may determine which political advertisements you see, how your application for your dream job is screened, how police officers are deployed in your neighborhood, and even how your home's risk of fire is predicted.

These systems can be biased depending on who builds them, how they are developed, and how they are ultimately used. This is commonly known as algorithmic bias. It is hard to determine exactly how a system may be vulnerable to algorithmic bias, largely because this technology often operates inside a corporate black box. We usually do not know how a particular AI or algorithm was designed, what data helped build it, or how it works.

What Are AI and Algorithms?

Source: unsplash.com

An algorithm is essentially a set of instructions: a rigid, preset, coded formula that is executed when it encounters a trigger. In other words, algorithms are shortcuts that help us give instructions to computers. An algorithm tells a computer what to do next using logical statements such as “and,” “or,” and “not.” As with most things rooted in mathematics, it starts out simple but can become enormously complex as it is expanded.
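As a minimal sketch of such a fixed rule, assume a hypothetical bill-payment app that sends a reminder only when a set of "and"/"not" conditions are all met. The function name `should_send_reminder` and its parameters are illustrative, not taken from any real app.

```python
def should_send_reminder(amount_due: float, days_overdue: int, autopay_enabled: bool) -> bool:
    """Hypothetical preset rule: the logic never changes, whatever data it sees."""
    # Trigger only if money is owed AND the bill is late AND autopay is NOT switched on.
    return amount_due > 0 and days_overdue > 0 and not autopay_enabled


print(should_send_reminder(42.50, 3, False))  # True
print(should_send_reminder(42.50, 3, True))   # False
```

The rule does exactly what it was written to do and nothing more; changing its behavior means a person has to rewrite it.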

Artificial Intelligence (AI) is a very broad term covering many specializations and subsets. It is a collection of algorithms that can modify themselves and create new algorithms in response to learned data inputs, rather than relying solely on the inputs they were originally designed to recognize as triggers. This capacity to change, adapt, and grow based on new data is described as “intelligence.”
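A minimal sketch of that difference, under heavy simplification: instead of hard-coding a rule, the hypothetical `learn_cutoff` function below derives a one-number decision rule (a threshold) from labeled examples. Real AI systems are vastly more complex; this only illustrates that a learned rule changes when the training data changes.

```python
from typing import List, Optional, Tuple


def learn_cutoff(examples: List[Tuple[float, bool]]) -> Optional[float]:
    """Pick the cutoff that misclassifies the fewest training examples.

    The learned rule predicts True when value >= cutoff. Because the cutoff
    is derived from the data, different data produces a different rule.
    Returns None if there are no examples.
    """
    best_cutoff, best_errors = None, len(examples) + 1
    # Try every observed value as a candidate cutoff.
    for cutoff in sorted({value for value, _ in examples}):
        errors = sum((value >= cutoff) != label for value, label in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


old_data = [(1, False), (2, False), (3, True), (4, True)]
new_data = [(1, False), (2, True), (3, True), (4, True)]
print(learn_cutoff(old_data))  # 3
print(learn_cutoff(new_data))  # 2
```

The same code yields two different rules, because the rule comes from the data rather than from the programmer.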

Algorithms Imbibe Human Biases

AI algorithms are trained to understand, recommend, or make predictions based on huge amounts of historical data, or ‘big data.’ As a result, AI and machine learning are only as good as the data they are trained on.

However, the data itself is often loaded with biases. For instance, data used to train AI models for predictive policing has a disproportionate representation of African-American and Latino people. In other words, algorithms imbibe the political, racial, social, and gender biases that exist in people and in society.

Historical Data and the Definition of Success in Algorithms

To build an algorithm, you basically need two things: historical data and a definition of success.

The definition of success depends on the organization building the algorithm. Each time we build an algorithm, we curate the data, we define success, and we embed our values in it.
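A minimal sketch of why the definition of success matters, assuming hypothetical employee records and two hypothetical success definitions (`stayed_long` and `got_promoted`). Whichever definition the builder picks decides which past employees become the "successful" examples a model would learn to imitate.

```python
from typing import Callable, List

# Hypothetical historical employee records (illustrative values only).
records = [
    {"name": "A", "years_stayed": 5, "promoted": False},
    {"name": "B", "years_stayed": 1, "promoted": True},
    {"name": "C", "years_stayed": 3, "promoted": True},
]


def label_records(records: List[dict], is_success: Callable[[dict], bool]) -> List[str]:
    """Return the names that the chosen definition of success marks as successful."""
    return [r["name"] for r in records if is_success(r)]


def stayed_long(r: dict) -> bool:
    # Builder 1: success means staying at least three years.
    return r["years_stayed"] >= 3


def got_promoted(r: dict) -> bool:
    # Builder 2: success means having been promoted.
    return r["promoted"]


print(label_records(records, stayed_long))   # ['A', 'C']
print(label_records(records, got_promoted))  # ['B', 'C']
```

Same data, different definition of success, different training labels: the builder's values are already in the model before any learning happens.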

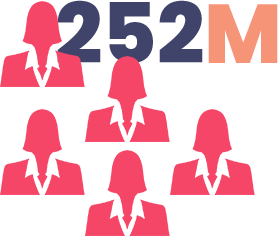

Consider an AI model trained on historical employment data from the engineering field: its top candidates for an engineering job would be men. While the algorithm might succeed in identifying the leading candidates, it overlooks the fact that the data over-represents men.
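A deliberately simplified sketch of this effect, assuming a hypothetical "model" that scores each candidate by how much their group resembles past hires. Real hiring models rarely use gender directly, but they may pick it up through correlated features; the history, names, and scoring scheme here are all illustrative.

```python
from collections import Counter
from typing import Dict, List


def train_scorer(past_hires: List[str]) -> Dict[str, float]:
    """Score each group by its share of past hires (a naive 'model')."""
    counts = Counter(past_hires)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


# Hypothetical history in which 9 of 10 past engineering hires were men.
past_hires = ["man"] * 9 + ["woman"] * 1
scores = train_scorer(past_hires)

candidates = [("Priya", "woman"), ("Tom", "man")]
# Rank candidates by their group's score; unseen groups get 0.0.
ranked = sorted(candidates, key=lambda c: scores.get(c[1], 0.0), reverse=True)
print(scores)  # {'man': 0.9, 'woman': 0.1}
print(ranked)  # [('Tom', 'man'), ('Priya', 'woman')]
```

The scorer faithfully reproduces the history it was given, which is exactly the problem: it cannot tell "who was hired" apart from "who should be hired."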

Accuracy vs. Fairness

Another vital factor to watch is who builds the algorithms and who decides how they are deployed.

This leads us to the question of what purpose an algorithm actually serves.

Algorithms are not objective; they are designed to work for the people who develop them. This is why an algorithm may succeed at its assigned task yet still be unfair or unjust to some of the people it affects.

Source: pexels.com

“Fairness” is not a metric that an algorithm can definitively measure.

Consider the builder of an algorithm intended to identify the most promising architect from vast amounts of resumes and employment data. The historical gender imbalance in that data may simply not be a problem the AI model was trained to solve.
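One way to see why no single number settles the question: fairness has several formal definitions, and they can disagree. In the hypothetical sketch below, a model is 100% accurate against the historical labels and selects every qualified candidate in both groups (equal true positive rates, often called "equal opportunity"), yet it selects group A at twice the rate of group B (failing "demographic parity") because the labels differ between groups. If those labels reflect past imbalance, high accuracy simply reproduces it. The data and function names are illustrative.

```python
# Each row: (group, qualified_per_historical_label, selected_by_model). Hypothetical data.
rows = [
    ("A", True, True), ("A", True, True), ("A", False, False), ("A", False, False),
    ("B", True, True), ("B", False, False), ("B", False, False), ("B", False, False),
]


def selection_rate(rows, group):
    """Share of the group that the model selects (compared under demographic parity)."""
    picks = [sel for g, _, sel in rows if g == group]
    return sum(picks) / len(picks)


def true_positive_rate(rows, group):
    """Share of the group's qualified members that are selected (equal opportunity)."""
    picks = [sel for g, qualified, sel in rows if g == group and qualified]
    return sum(picks) / len(picks)


accuracy = sum(q == s for _, q, s in rows) / len(rows)
print(accuracy)                                                      # 1.0
print(selection_rate(rows, "A"), selection_rate(rows, "B"))          # 0.5 0.25
print(true_positive_rate(rows, "A"), true_positive_rate(rows, "B"))  # 1.0 1.0
```

By one definition this model is fair; by another it is not. Which definition should apply is a human choice, not something the algorithm can gauge for itself.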

Black Box – Algorithms and Accountability

Most proprietary algorithms are black boxes. While complex AI algorithms process vast amounts of granular data about many aspects of our lives, we know almost nothing about how they work.

Yet these models are used to make decisions about our lives that are opaque, unregulated, and uncontestable, even when they are wrong.

Big tech companies frame the issue as one they are equipped to solve (by using more AI).

Will This Algorithmic Bias Change?

Machines can only work from the data we choose to feed them.

Algorithms learn a language, among other things, by processing an enormous amount of text. Looking back over the last few hundred years, we see that the voices dominating literature, art, philosophy, and science in the Western world were predominantly male, white, and Western. Until recently, straight white men dominated the tech industry as well.

It makes sense, then, that they never noticed the inherent bias in commercial facial-analysis software discovered by researcher Joy Buolamwini. They had never thought (or needed) to look.
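To make the point about learning from text concrete, here is a drastically simplified stand-in for how language models pick up associations: counting which words appear together. The tiny corpus and the `cooccurrence` helper are hypothetical; real models learn from billions of sentences with far more sophisticated methods, but the principle that skewed text yields skewed associations is the same.

```python
import re
from collections import Counter

# Hypothetical toy corpus standing in for the huge body of text a model reads.
corpus = [
    "He is an engineer.",
    "He works as an engineer at a large firm.",
    "She is a nurse.",
    "The engineer said he would fix it.",
    "She is an engineer.",
]


def cooccurrence(corpus, target, pronouns=("he", "she")):
    """Count sentences in which each pronoun appears alongside the target word."""
    counts = Counter()
    for sentence in corpus:
        # Split into lowercase words so "she" is not miscounted as "he".
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        if target in words:
            for p in pronouns:
                if p in words:
                    counts[p] += 1
    return counts


counts = cooccurrence(corpus, "engineer")
print(counts)  # Counter({'he': 3, 'she': 1})
```

Nothing in the code is prejudiced, yet the association it learns between "engineer" and "he" is three times stronger, purely because of who wrote the text.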

Over time, these tech-based recruiting tools will probably improve and, ideally, screen candidates free of any discriminatory bias. Until the technology matures, however, employers should take steps to ensure that members of protected classes are not disproportionately screened out by tech-based recruiting algorithms.
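One concrete check employers can run is the "four-fifths rule" of thumb from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group's rate is generally treated as evidence of adverse impact. The sketch below computes those ratios from hypothetical screening counts; the function names are illustrative, and the result is a screening heuristic, not a legal determination.

```python
from typing import Dict, List


def adverse_impact_ratios(applicants: Dict[str, int], selected: Dict[str, int]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate.

    Assumes every group has at least one applicant and at least one
    candidate was selected overall.
    """
    rates = {g: selected[g] / applicants[g] for g in applicants}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


def flag_adverse_impact(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Groups falling below the four-fifths (80%) rule of thumb."""
    return [g for g, r in ratios.items() if r < threshold]


# Hypothetical results from a tech-based recruiting algorithm.
applicants = {"men": 100, "women": 100}
selected = {"men": 50, "women": 25}
ratios = adverse_impact_ratios(applicants, selected)
print(ratios)                       # {'men': 1.0, 'women': 0.5}
print(flag_adverse_impact(ratios))  # ['women']
```

A flagged group does not prove the tool is discriminatory, but it is a clear signal that the screening results deserve a closer human look.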

Source: thequint.com
